I have operated a Nextcloud instance for several years. It has completely replaced Dropbox, OneDrive and even Google Drive for me. However, my single-instance Nextcloud setups have occasionally had downtime (power cuts, server issues and especially ‘administrator configuration fubars’). I have experimented with a Nextcloud failover service to try to improve my uptime, and it’s now in ‘experimental operation’.

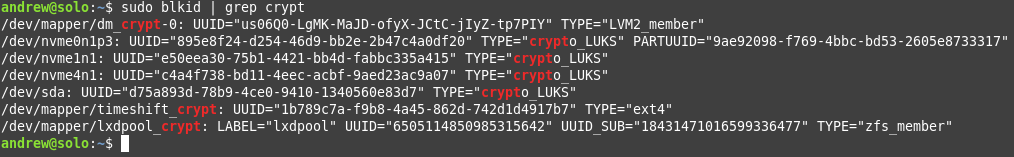

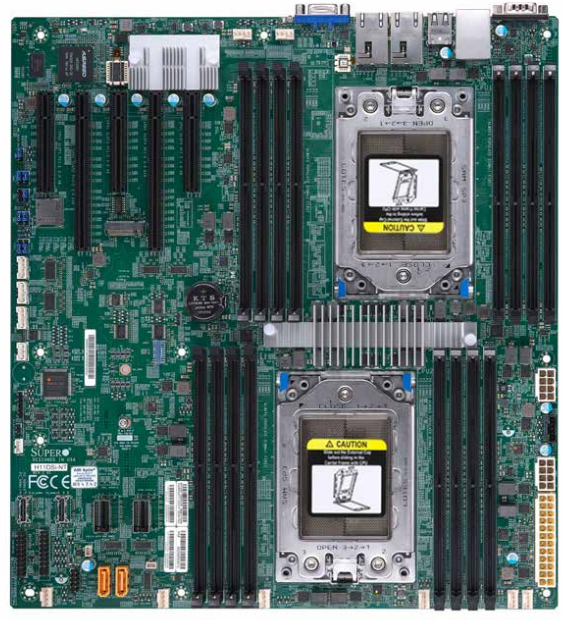

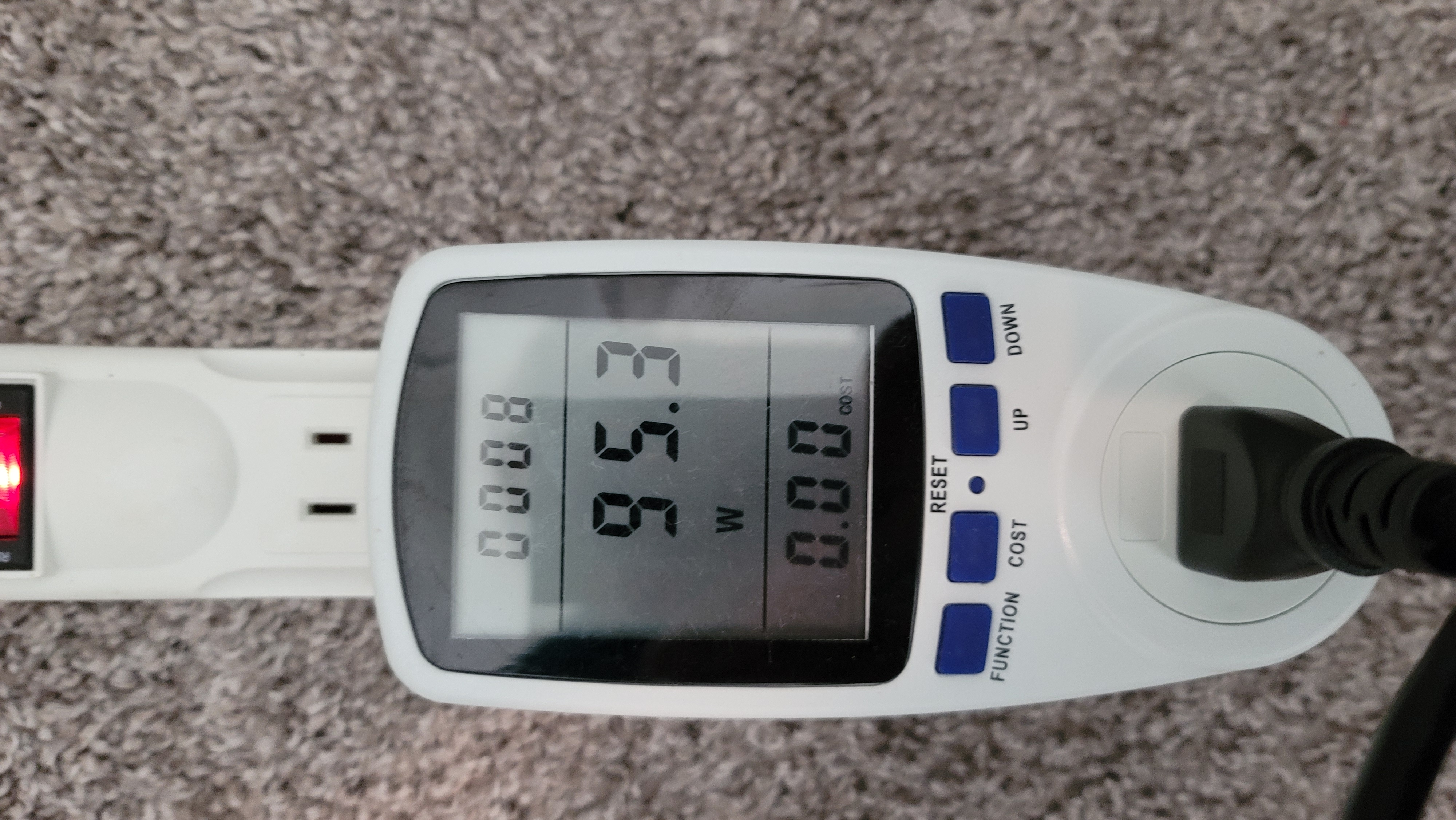

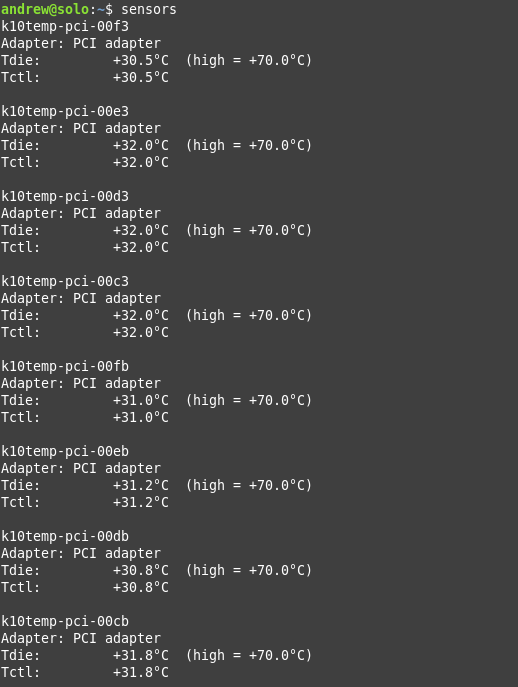

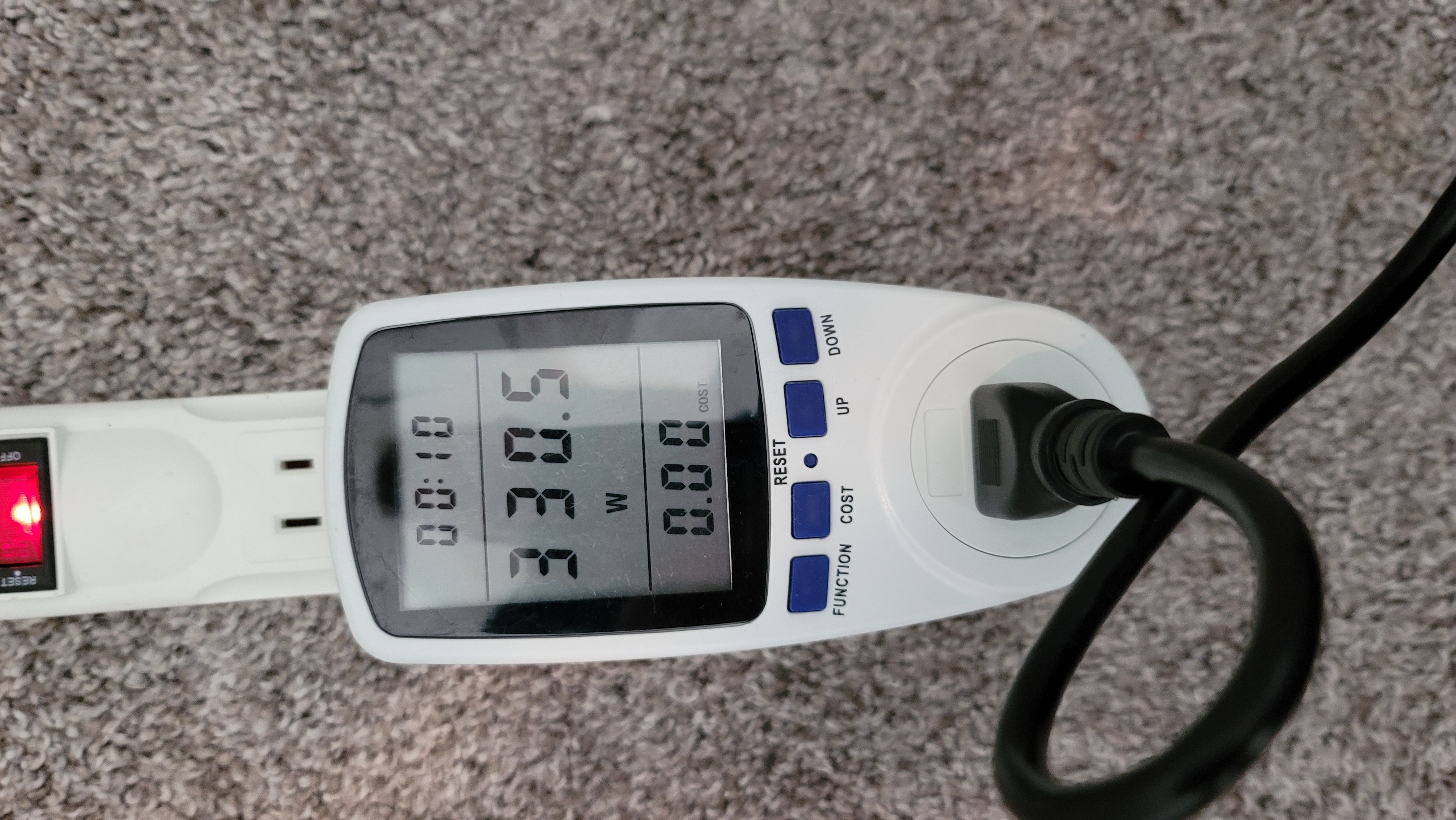

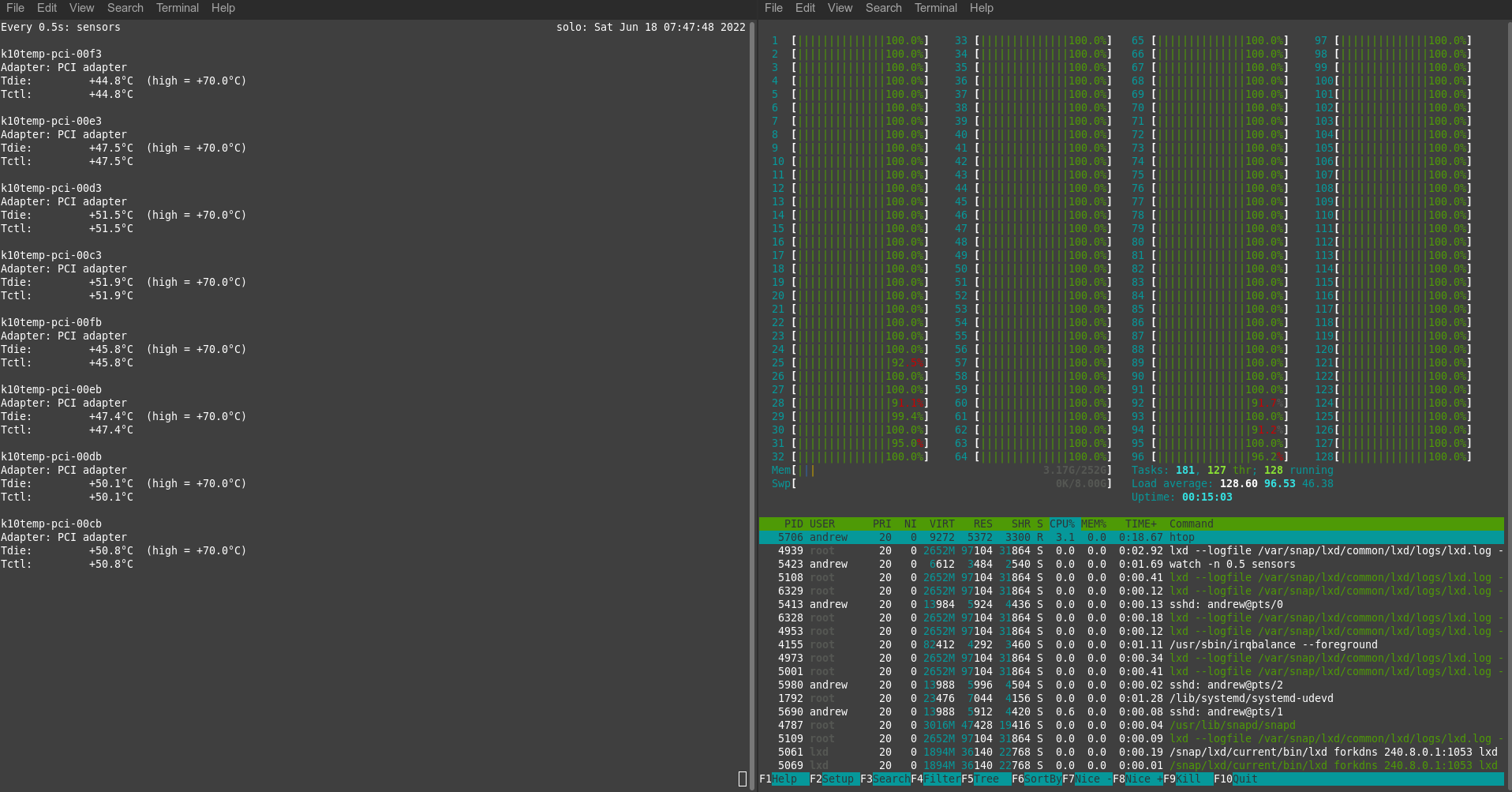

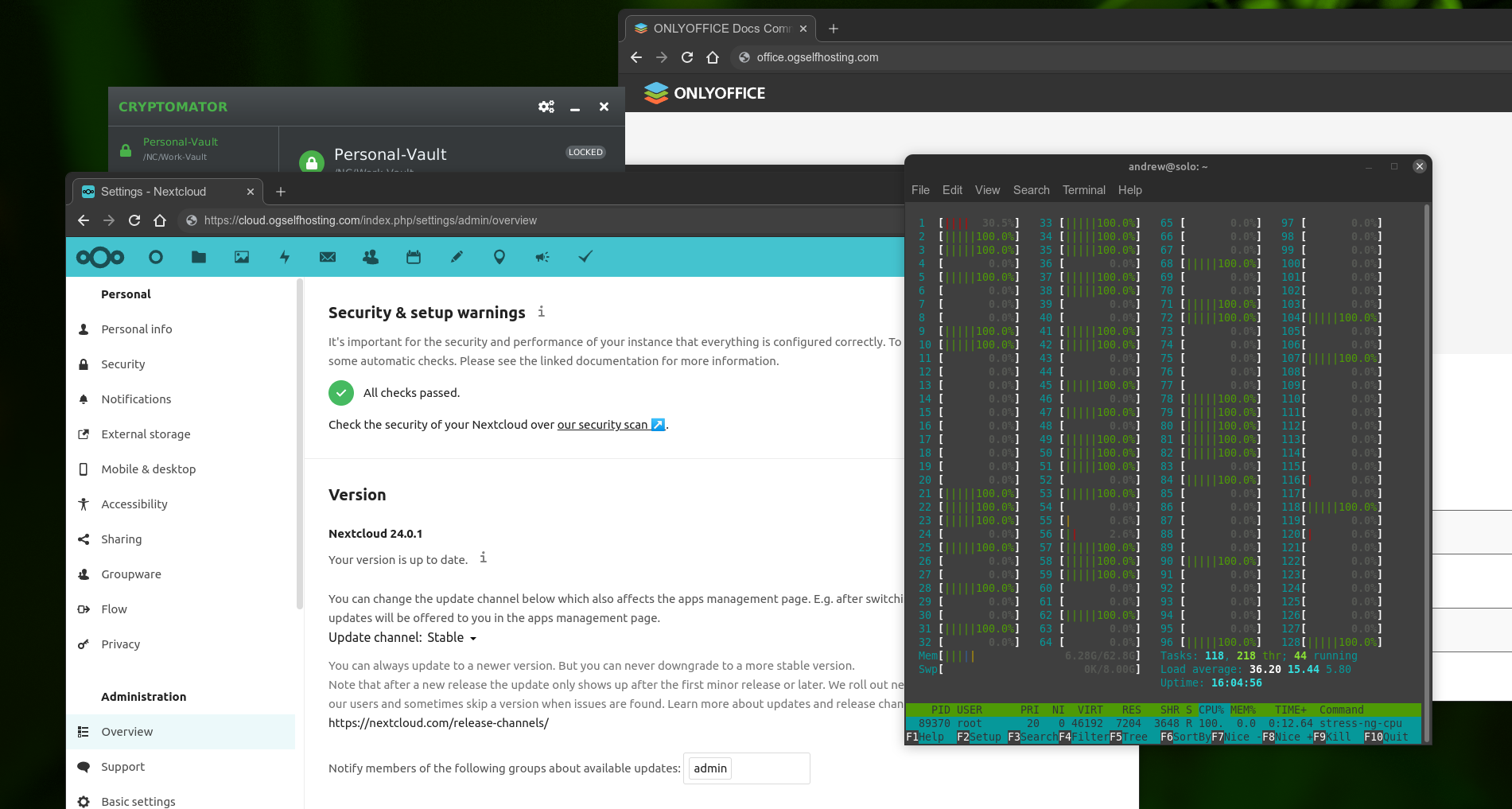

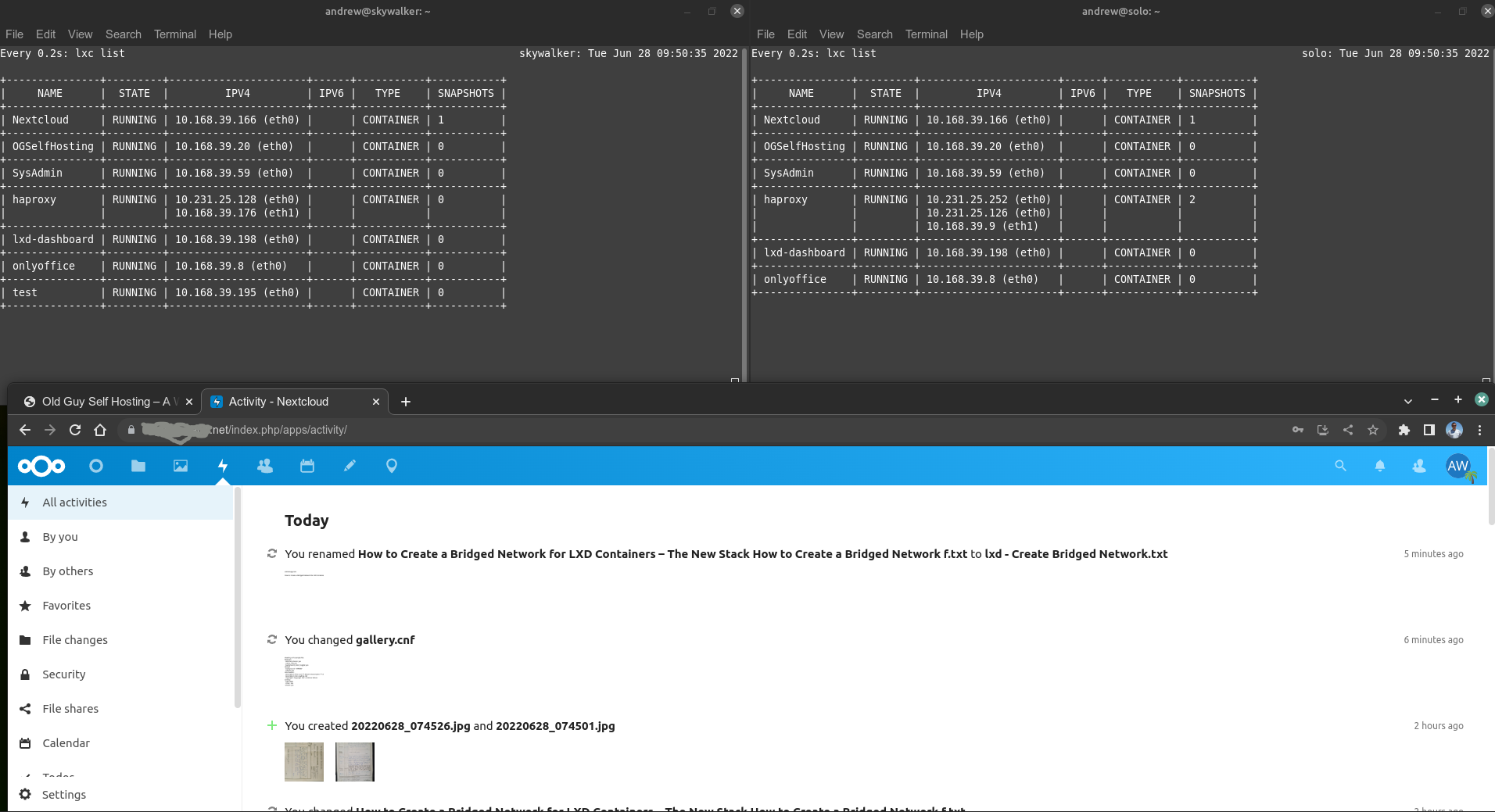

I now have TWO instances running on two different hardware platforms. Both instances run in a virtual environment. One, running on my new dual-EPYC server, is the primary instance intended to be in operation ‘all of the time’. The other, on a purpose-built server based on consumer hardware, is a mirror of the primary instance that is, in theory, always hot and able to come online at a moment’s notice. If my primary server goes down, the backup takes over in about 1-3 seconds.

I rely upon two key software packages to help me make this happen: (1) LXD, which I use to run all my containers and even some of my VMs (I suspect Docker would work equally well); and (2) keepalived, which provides me with a ‘fake’ (virtual) IP that moves between servers depending on whether they are operational or not.
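To make the ‘fake’ IP idea a little more concrete, here is a minimal Python sketch of the outcome keepalived aims for: the healthy server with the highest priority holds the virtual IP. This is only a toy model, not how keepalived is implemented (the real thing uses VRRP advertisements, timers and preemption settings). The node names and priority values are hypothetical and are not taken from my configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str       # hypothetical host label
    priority: int   # higher value wins, as with VRRP priorities
    healthy: bool   # result of whatever health check is configured


def vip_holder(nodes: list[Node]) -> Optional[Node]:
    """Return the healthy node with the highest priority, or None if all are down."""
    candidates = [n for n in nodes if n.healthy]
    return max(candidates, key=lambda n: n.priority, default=None)


primary = Node("epyc-primary", priority=150, healthy=True)
backup = Node("consumer-backup", priority=100, healthy=True)

print(vip_holder([primary, backup]).name)  # epyc-primary
primary.healthy = False                    # simulate the primary going down
print(vip_holder([primary, backup]).name)  # consumer-backup
```

Clients only ever talk to the virtual IP, so they do not need to know which of the two servers is currently answering.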

I am going to run this service with just two instances (i.e. one fail-over server). For now, both servers are hosted in the same physical property and use the same power supply, so I do not have professional-grade redundancy (yet). I may add a third instance to this setup and even try to place it in a different physical location, which would considerably improve robustness against power loss, internet outages etc. But that’s for the future. Today I finally have some limited, albeit production-grade, fail-over capability. I shall see if this actually makes my reliability better (as intended), or if the additional complexity just brings new problems that make things worse, or at least no better.

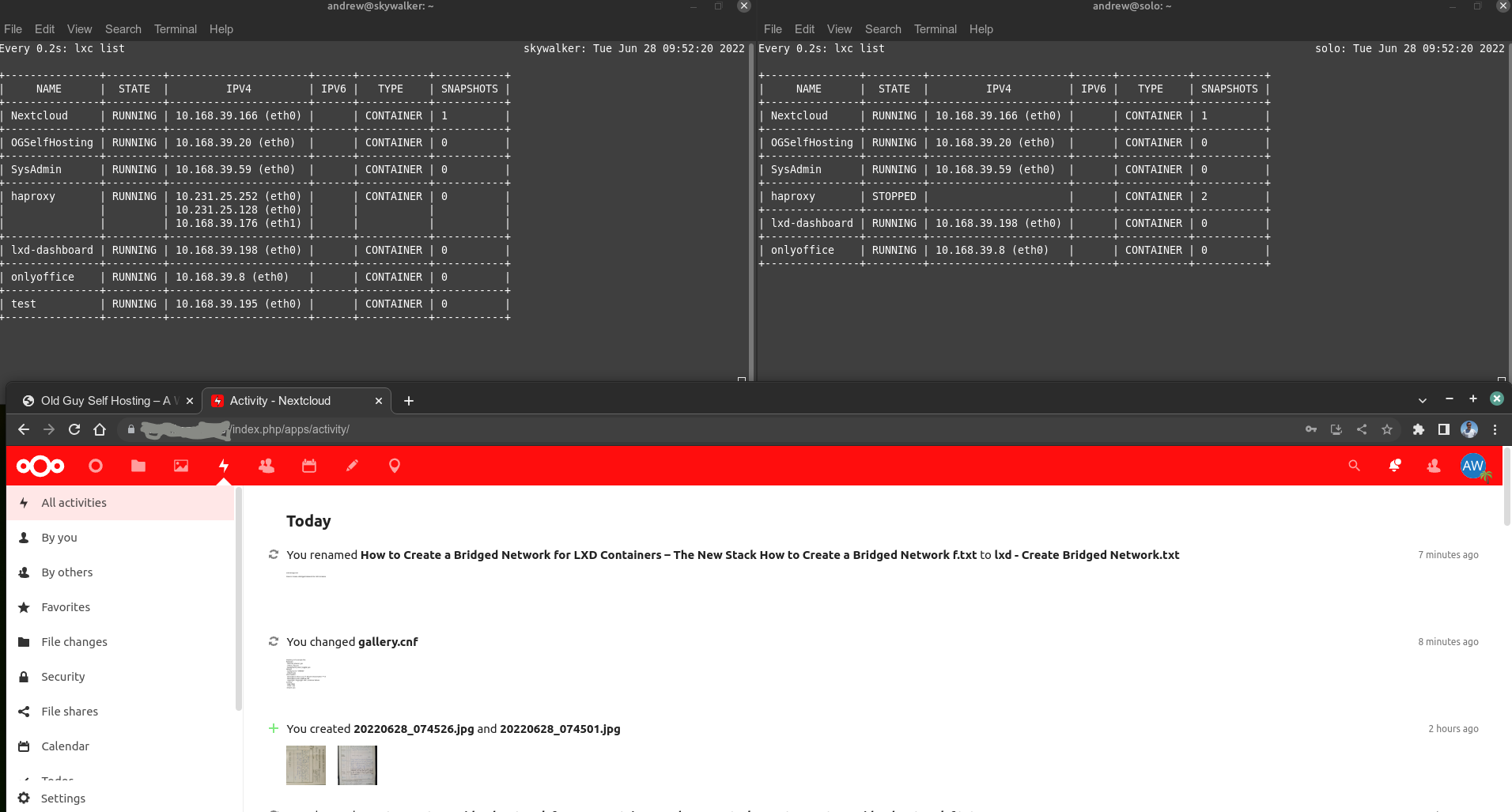

A couple of additional details: I actually hot-backup both my Nextcloud server and a WordPress site I operate. As you can also see from the above image, I deliberately change the COLOR of my Nextcloud banners (from blue to an unsubtle RED) to help me realize something is up if my EPYC server goes down, since I don’t always pay attention to phone notifications. I only perform a one-way sync, so any changes made to a backup instance will not be automatically copied back to the primary server when it comes back online after a failure. This is deliberate, to avoid making the setup too complicated (added complexity rarely goes unpunished!). A pretty useful feature: my ENTIRE Nextcloud instance is hot-copied, including links, apps, files, shares, SQL database, SSL certs, user settings, 2FA credentials etc. Other than the color of the banner (and a pop-up notification), the instances are ‘almost identical’*. LXD provides me with this level of redundancy as it copies everything when you use the refresh mode. Many other backup/fail-over implementations I have explored in the past do not provide the same level of easy redundancy for a turn-key service.

(*) Technically, the two instances can never be truly 100.0000000…% identical, no matter how fast you mirror an instance. In my case, there is a user-configurable difference between the primary server and the backup server at the moment the fail-over comes online. I say user-configurable because it depends on the time delay for copying the differences between server1 and server2, which I configure via the scheduling of the ‘lxc copy --refresh’ action. On a fast network, this can be as little as a minute or two, or potentially even faster. For my use-case, I accept the risk of losing a few minutes’ worth of changes, which is my maximum risk in exchange for the benefit of having a fail-over service. Accordingly, I run my sync script “less frequently” rather than running a copy --refresh script constantly, and as of now the interval is a variable I am still playing with.
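For anyone who wants a starting point, a scheduled one-way sync along these lines could look roughly like the Python sketch below. It is not my actual script. The instance names and the LXD remote name (`backup-server`) are hypothetical, and it assumes the backup host has already been added as an LXD remote on the primary.

```python
import subprocess
from typing import Callable, Sequence

# Hypothetical names: replace with your own instances and LXD remote.
INSTANCES = ["nextcloud", "wordpress"]
BACKUP_REMOTE = "backup-server"


def build_copy_command(instance: str, remote: str) -> list[str]:
    """Build the lxc command that refreshes the copy of `instance` on `remote`."""
    return ["lxc", "copy", instance, f"{remote}:{instance}", "--refresh"]


def sync_all(
    instances: Sequence[str],
    remote: str,
    runner: Callable[..., object] = subprocess.run,
) -> list[str]:
    """Refresh each instance on the remote; return the names that failed to sync."""
    failed = []
    for name in instances:
        try:
            # check=True makes subprocess.run raise if lxc exits non-zero.
            runner(build_copy_command(name, remote), check=True)
        except (subprocess.CalledProcessError, OSError):
            failed.append(name)
    return failed
```

Calling `sync_all(INSTANCES, BACKUP_REMOTE)` from cron or a systemd timer gives the one-way mirror. The worst-case window of lost changes is then roughly the schedule interval plus the time one refresh takes to complete.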

If anyone has any interest in more details on how I configure my fail-over service, I’ll be happy to provide details. Twitter: @OGSelfHosting